Introduction

The process industry faces a plethora of operational challenges, but no single standard technique can address all of them. Common challenges include identifying critical process parameters and controlling process variables and quality parameters, among many others. Every technique has its own advantages and disadvantages, so to get the best out of any of them one should know what to use, and when. This is an important point, because many different types of models can serve a "fit-for-requirement" purpose. So, let us take a deeper look at the modeling landscape.

Statistical Models in the Process Industry

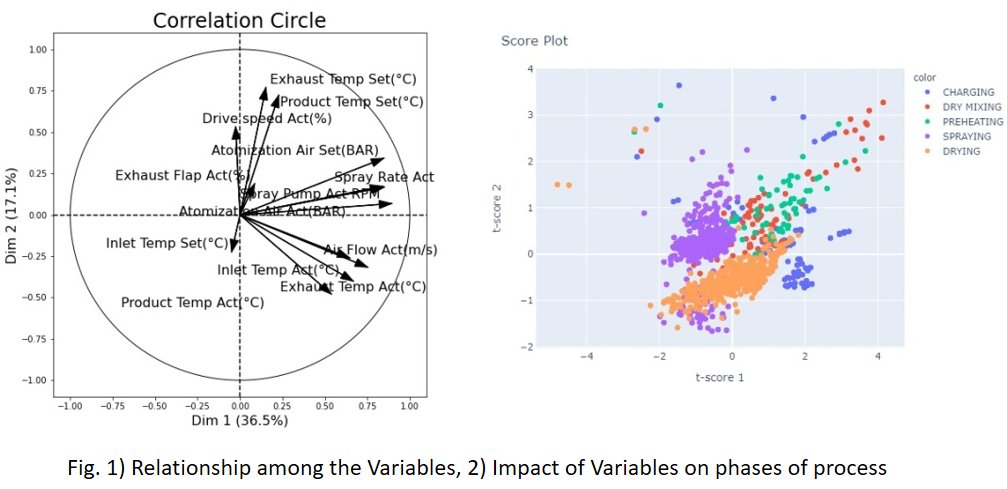

Statistical models are normally preferred when we are more interested in identifying the relationships among the process variables and output parameters. These models involve hypothesis testing and distribution analysis, which require estimating metrics such as the mean and standard deviation (SD) of the sample and of the population. Is your sample a generalized representation of your population? This is an important question, and its answer is the initial input to any model-building exercise. The z-test, chi-squared test, t-test (univariate and multivariate), ANOVA, least squares and many other, more advanced techniques can be used to perform this statistical analysis and estimate the difference between the sample and population datasets.
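As a minimal illustration of the simplest test in that list, the sketch below runs a two-sided one-sample z-test, which asks whether a sample's mean is consistent with a known population mean. It assumes the population mean and SD are known (for instance, taken from the full historical record); the helper name `one_sample_z_test` and the example readings are hypothetical, and only the Python standard library is used.

```python
import math
from statistics import NormalDist, mean


def one_sample_z_test(sample, pop_mean, pop_sd):
    """Two-sided one-sample z-test (hypothetical helper).

    Assumes the population SD is known, e.g. estimated from the
    full historical dataset. Returns (z statistic, p-value).
    """
    n = len(sample)
    standard_error = pop_sd / math.sqrt(n)
    z = (mean(sample) - pop_mean) / standard_error
    # Probability of observing |z| at least this large if the sample
    # really was drawn from the population.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical example: 16 readings averaging 10.5, compared with a
    # historical mean of 10.0 and a historical SD of 1.0.
    z, p = one_sample_z_test([10, 11] * 8, pop_mean=10.0, pop_sd=1.0)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

Here z = 2.00 and p is about 0.0455, so at the 5% significance level this sample's mean would be judged different from the historical mean. When the population SD is unknown and the sample is small, a t-test (for example `scipy.stats.ttest_1samp`) is the more appropriate choice.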

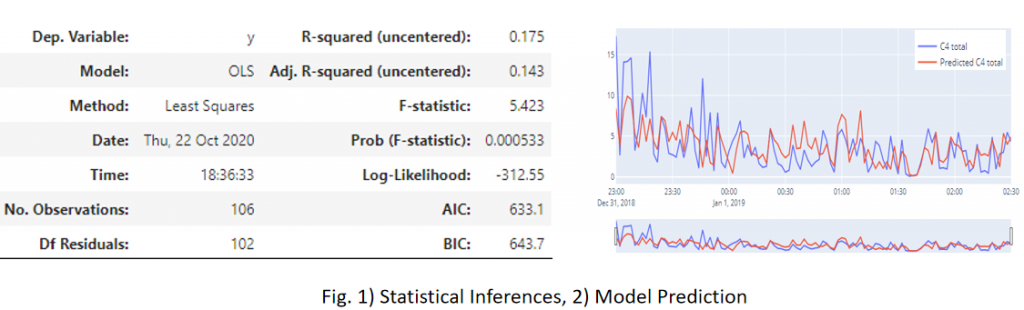

Let us understand this with an example. Consider a distillation process for which you have data on the feed pressure, flow rate and temperature, and on the purity of the top stream. In terms of availability, assume you have one year's worth of data. You split the dataset into training and testing sets in some ratio, say 7:3, and fit a model. But the model does not reach the accuracy you expected. So, what could have gone wrong? There are many possibilities; for the purpose of this article, let us focus only on the statistical inferences.

Since the model was not able to generalize on the distillation dataset, we should first investigate the dataset itself. How? Did we compare the mean and SD of the training and testing datasets? No? Then we should! As discussed above, to set up a model that makes reliable predictions, we need to be sure that the sample (in this case, the training and testing datasets) is representative of the population. This means that the mean and SD should not change significantly between these two datasets, nor when they are compared with any random sample drawn from the historical population dataset. This exercise also gives us an idea of the minimum volume of data required to assess the model's robustness and predictability. Finally, to quantify our confidence in the effect of any input parameter on the output variable, we can use its p-value, which indicates the statistical significance (and hence, loosely, the importance) of that input parameter.
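The check described above can be sketched in standard-library Python. The sketch assumes the data are time-ordered rows (dictionaries keyed by hypothetical column names such as `feed_pressure` or `top_purity`) split chronologically 7:3. It compares each variable's mean with a large-sample two-sample z-test (a Welch-style standard error with a normal approximation, which is reasonable for a year of process data) and reports the ratio of the SDs. A second helper estimates the p-value of one input's slope in a simple linear regression against the output, again using a normal approximation. All function names are illustrative and do not come from any particular library.

```python
import math
from statistics import NormalDist, mean, stdev


def split_train_test(rows, train_fraction=0.7):
    """Chronological split: the first 70% of time-ordered rows go to training."""
    cut = round(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


def two_sided_p(z):
    """Two-sided p-value of a standard-normal test statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def compare_train_test(train, test, alpha=0.05):
    """Compare the mean and SD of every variable between train and test sets."""
    report = {}
    for name in train[0]:
        a = [row[name] for row in train]
        b = [row[name] for row in test]
        mean_a, mean_b = mean(a), mean(b)
        sd_a, sd_b = stdev(a), stdev(b)
        # Welch-style standard error of the difference in means.
        se = math.sqrt(sd_a**2 / len(a) + sd_b**2 / len(b))
        if se == 0:
            p = 1.0 if mean_a == mean_b else 0.0
        else:
            p = two_sided_p((mean_a - mean_b) / se)
        report[name] = {
            "train_mean": mean_a,
            "test_mean": mean_b,
            "sd_ratio": sd_a / sd_b if sd_b else math.inf,
            "p_value": p,
            # True means the test set may not represent the training data.
            "shifted": p < alpha,
        }
    return report


def slope_p_value(x, y):
    """p-value for the slope b in y = a + b*x (normal approximation, large n).

    Assumes noisy data; a perfect fit gives a zero standard error.
    """
    n = len(x)
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    return two_sided_p(slope / se_slope)
```

A chronological split is used here because plant data often drift with seasons or campaigns, which is exactly the kind of shift this comparison is meant to expose; a shuffled split would hide it. For small samples, a proper t-test such as `scipy.stats.ttest_ind(a, b, equal_var=False)` would replace the normal approximation.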

Machine Learning Models in the Process Industry

So, now we have some idea of the importance of statistical models for process data: they tell us how the process parameters relate to one another and to the quality parameters. Machine learning models can then be used to make the predictions.

Typically, what we have seen in the process industry is that engineers need correlations and relationships in the form of an equation. A black-box model can add complexity in practical applications, as it provides no information on how the prediction was arrived at.

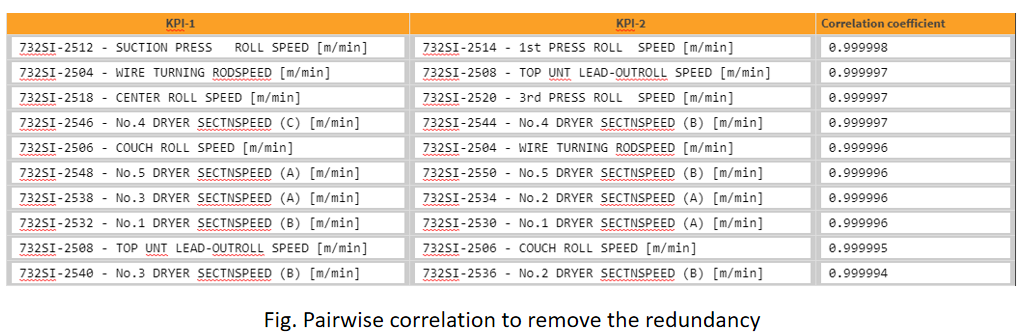

Sometimes even a model with 80% accuracy is sufficient if it provides enough information on the relationships among the process parameters. For this reason, we always first try to fit a parametric model, such as a linear or polynomial model of the desired degree, to the dataset. This at least gives us a fair understanding of how much of the variability each pair of parameters explains, and how interpretable that relationship is.
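As a rough sketch of this first step, the code below fits a polynomial of a chosen degree to one input/output pair by ordinary least squares and reports R², the fraction of the output's variability explained by the fit. It uses only the standard library and solves the normal equations directly, which is adequate for low degrees and well-scaled data; for real plant data, a library routine such as `numpy.polyfit` would be the more robust choice. The function names are hypothetical.

```python
def fit_polynomial(x, y, degree):
    """Least-squares polynomial fit; returns c with y ~ sum(c[j] * x**j)."""
    n = degree + 1
    # Normal equations A c = b, with A[j][k] = sum(x**(j+k)), b[j] = sum(y * x**j).
    A = [[sum(xi ** (j + k) for xi in x) for k in range(n)] for j in range(n)]
    b = [sum(yi * xi**j for xi, yi in zip(x, y)) for j in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = A[r][col] / A[col][col]
            for c in range(col, n):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    # Back substitution on the upper-triangular system.
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        remainder = b[r] - sum(A[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = remainder / A[r][r]
    return coeffs


def r_squared(x, y, coeffs):
    """Fraction of the variance in y explained by the fitted polynomial."""
    y_hat = [sum(c * xi**j for j, c in enumerate(coeffs)) for xi in x]
    y_bar = sum(y) / len(y)
    ss_res = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot
```

For example, with x = [0, 1, 2, 3, 4] and y = 1 + 2x + 0.5x², a degree-2 fit recovers coefficients of about [1, 2, 0.5] with R² of 1, while a straight line gives y = 4x with R² of about 0.979. A large gain from degree 1 to 2 suggests real curvature, while a negligible gain suggests the simpler, more interpretable linear relationship is enough. Note that in-sample R² never decreases as the degree grows, so the comparison is best repeated on held-out data.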

Conclusion

We can say that predictions and inferences (interpretability) should always go hand in hand in the process industry for a transparent and reliable model. A prediction by itself is not sufficient for the industry; it needs more. For example, if the predicted value is not optimal or does not meet standard specifications, what should be done? The answer is a recommendation or prescription, in the form of an SOP that the operator or engineer can follow to bring the process back to its optimal state, and this comes from statistical inference. For a strategic digitalization deployment across your organization, we suggest using the best of both techniques.

To learn more about how to use these techniques for your specific process application, please contact us.

Who We Are

Whether you are starting your digitalization journey or are stuck somewhere along the way, we, the Process Analytics Group at Tridiagonal Solutions, can support you in many ways.

We run the following programs to help the industry with its various needs:

- A POV/POC program: to justify the right analytical approach and evaluate the use cases that can directly benefit your ROI.

- Training sessions for upskilling process engineers: how to apply the right analytics to solve process and operational challenges, without getting into the maths behind it.

- Python-based solutions: low-code templates for RCA, soft sensors, fingerprinting of KPIs, and many others.

- A team that can become part of your COE, continuously helping you improve your process efficiency and monitor your operations on a regular basis.

- A core data-science team (chemical engineers) that can handle complex unit processes and operations by providing the best analytical solution for your processes.

Written by,

ParthPrasoon Sinha

Sr. Data Scientist

Tridiagonal Solutions