In this article we focus on cleaning data for efficient analytics. Data cleaning is one of the most important steps in any data analysis or data science methodology, and efficient cleansing leads to more accurate applied analytics. There is, however, a fine line between conventional data cleaning methods and data cleaning for process-manufacturing data. By the rules, data cleaning offers a simple and intuitive procedure to follow whenever there are missing, invalid, or outlier values: you can remove the sample point, remove the entire feature if required, fill the gap with some logical value, and so on. This makes the analytics easier to apply, but does it solve your data integrity concerns in process manufacturing? The answer is no!

Let’s start with data integrity and its importance. The term leads to several attributes, such as:

- Accuracy

- Completeness

- Consistency of the data throughout its entire life cycle

Accuracy

Accuracy refers to the correctness of the sensor values and to the latency in collecting each signal’s value. It also helps us determine whether a stored value is an outlier, and what corrective action is needed. The estimated error between the actual value and the recorded value can help you devise a better control strategy, based on the deviation between the set point and the recorded value. The latency in recording the sensor data, on the other hand, determines how early the operations team can detect a fault and act to bring the process back to the desired response.

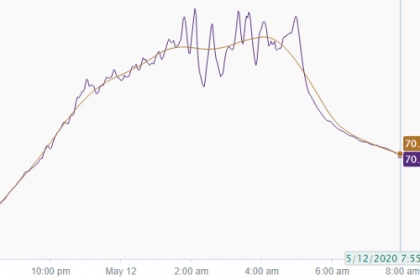
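The two aspects of accuracy described above, deviation from the set point and recording latency, can be summarised with a few lines of code. The following is a minimal sketch in plain Python; the function name `accuracy_report`, the tuple layout of `readings`, and the sample values are all assumptions made for illustration, not part of any particular historian or library.

```python
from datetime import datetime, timedelta
from statistics import mean


def accuracy_report(readings, setpoint):
    """Summarise deviation from the set point and recording latency.

    readings: list of (measured_at, stored_at, value) tuples.
    This layout is an assumption made for the example.
    """
    deviations = [abs(value - setpoint) for _, _, value in readings]
    latencies = [
        (stored_at - measured_at).total_seconds()
        for measured_at, stored_at, _ in readings
    ]
    return {
        "mean_abs_deviation": mean(deviations),
        "max_abs_deviation": max(deviations),
        "mean_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
    }


# Illustrative readings: sensor timestamp, storage timestamp, value.
t0 = datetime(2024, 1, 1, 8, 0, 0)
readings = [
    (t0, t0 + timedelta(seconds=2), 101.0),
    (t0 + timedelta(minutes=1), t0 + timedelta(minutes=1, seconds=5), 99.0),
    (t0 + timedelta(minutes=2), t0 + timedelta(minutes=2, seconds=2), 104.0),
]
report = accuracy_report(readings, setpoint=100.0)
print(report)
```

What counts as an acceptable deviation or latency is a process decision; the sketch only makes the two quantities visible so that they can be tracked.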

For predictive analytics aimed at process improvement, both correctness and latency play a vital role. A model deployed at production scale relies heavily on the underlying data layer, which shows how important the quality of that data is. Automating the data cleansing procedure helps operations mitigate undesirable process dynamics and provides a metric for better control actions. One good example is a golden batch profile built around the critical signals of the process. This method assumes that each signal considered is highly accurate and reaches the display with very little delay, so that the operator can take the necessary action in near real time without much loss in the overall economics of the process. Another example is model predictive control, where the model learns which actions to take from its past actions and from the data it was exposed to. Both examples reinforce the point, and call for reconsidering any part of the underlying infrastructure that can increase the precision of the captured data.
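To make the golden batch idea concrete, here is a minimal sketch in plain Python. It assumes that historical "good" batches are already aligned on a common time grid, and it builds the profile as a mean plus or minus `k` standard deviations at each time step. That envelope is one common choice, not the only way to define a golden batch; the function names and sample numbers are hypothetical.

```python
from statistics import mean, stdev


def golden_profile(good_batches, k=3.0):
    """Return per-time-step (lower, upper) limits from aligned good batches."""
    envelope = []
    for values in zip(*good_batches):  # values of all batches at one time step
        centre = mean(values)
        spread = stdev(values)  # sample standard deviation across batches
        envelope.append((centre - k * spread, centre + k * spread))
    return envelope


def out_of_profile(batch, envelope):
    """Return the time-step indices where the batch leaves the envelope."""
    return [
        i
        for i, (value, (lower, upper)) in enumerate(zip(batch, envelope))
        if not lower <= value <= upper
    ]


# Illustrative data: three good batches of one critical signal.
good_batches = [
    [10, 20, 30],
    [12, 22, 32],
    [11, 21, 31],
]
envelope = golden_profile(good_batches, k=3.0)
deviating_steps = out_of_profile([11, 30, 31], envelope)
print(deviating_steps)
```

The envelope is only as trustworthy as the signals behind it, which is why the accuracy and latency of those signals matter before any such profile is put in front of an operator.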

The following use cases become possible when the data is accurate enough:

1. Golden batch profile for real-time monitoring

2. Dynamic modeling and optimization

3. Continuous process verification

4. Overall equipment effectiveness and reliability engineering

5. Equipment failure and performance prediction

Completeness

The term “Completeness” points to the very common issue of missing or invalid data. Another interpretation is whether the data under consideration is sufficient for understanding the process. To be honest, conventional data wrangling and cleaning techniques are not good practice when dealing with process data. Domain knowledge, or the know-how of subject matter experts (SMEs), should guide any manipulation of the data. For example, removing a process feature (process parameter) should be a logical decision and should not cause a major loss of process information. Missing cells in a feature cannot always be replaced with the average of the data-set (or any similar logic); the replacement must follow the time-series evolution of the process and be a practical representation of the operations strategy. One key point that is easy to miss is knowledge of the first-principles models: you can never override the physics and the understanding behind the process.
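As an illustration of filling values in a way that respects the time evolution of the signal, the sketch below interpolates linearly in time, but only across short gaps. Longer gaps are left empty so that an SME can decide what happened there. The function name `fill_short_gaps`, the `max_gap` parameter, and the sample data are assumptions for the example; linear interpolation is itself only one possible rule and may not suit every process.

```python
def fill_short_gaps(times, values, max_gap):
    """Linearly interpolate missing values (None) in time.

    A gap is filled only when the time between the valid readings on
    either side is at most max_gap (same unit as times). Longer gaps,
    and gaps at the start or end, are left as None for expert review.
    """
    filled = list(values)
    valid = [i for i, value in enumerate(values) if value is not None]
    for left, right in zip(valid, valid[1:]):
        if right - left == 1:
            continue  # neighbouring valid readings, nothing to fill
        span = times[right] - times[left]
        if span > max_gap:
            continue  # gap too long to fill blindly
        for i in range(left + 1, right):
            weight = (times[i] - times[left]) / span
            filled[i] = values[left] + weight * (values[right] - values[left])
    return filled


# Illustrative signal sampled at irregular times (minutes).
times = [0, 1, 2, 3, 10, 11]
values = [10.0, None, 14.0, None, None, 30.0]
filled = fill_short_gaps(times, values, max_gap=5)
print(filled)
```

Compare this with replacing every missing cell by the column average: the average ignores where in the batch or campaign the gap occurred, while the time-aware fill at least follows the local trend.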

It is often observed that some process parameters in the data table show very little variance around their mean value. This tempts the user to remove the feature without considering the repercussions, even though we (domain experts) know from the first-principles model that the removed feature is important. Instead, the first-principles model should be used to decide which process parameters to include in modeling, in order to capture the physics behind the operations accurately.
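One simple way to encode this in a cleaning script is to let a low-variance screen propose candidates, while a list of parameters that the first-principles model requires overrides the statistics. The sketch below uses plain Python; the function name, the threshold, and the tag names (`reactor_temp`, `feed_flow`, `spare_tag`) are hypothetical.

```python
from statistics import pvariance


def screen_low_variance(table, variance_threshold, protected):
    """Split low-variance features into droppable and protected ones.

    table: dict mapping feature name to a list of values.
    protected: names that the first-principles model says must be kept.
    """
    low_variance = [
        name
        for name, values in table.items()
        if pvariance(values) < variance_threshold
    ]
    drop = [name for name in low_variance if name not in protected]
    kept_by_physics = [name for name in low_variance if name in protected]
    return drop, kept_by_physics


# Illustrative data: a tightly controlled temperature looks "uninformative"
# to a variance filter, yet the process physics depends on it.
table = {
    "reactor_temp": [350.0, 350.1, 349.9, 350.0],
    "feed_flow": [10.0, 14.0, 8.0, 12.0],
    "spare_tag": [1.0, 1.0, 1.0, 1.0],
}
drop, kept_by_physics = screen_low_variance(
    table, variance_threshold=0.05, protected={"reactor_temp"}
)
print(drop, kept_by_physics)
```

The protected list is where the domain expert's knowledge enters; without it, the tightly controlled temperature would be discarded along with the genuinely empty tag.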

Consistency

The term “Consistency” itself suggests that uniformity of the data is another key attribute to consider while cleaning. Inconsistency in the data-set can have several causes, such as time-varying fluctuations, variability, or noise. It can also arise when the data-set contains startup, steady-state, and shutdown operations in one place, which is sometimes hard to model because most first-principles models assume steady operation as the standard. This means that if your data-set covers both transient and steady-state operations, the model will require frequent tuning, optimization, and management in real time. Intuitively, model management during transient operations is more cumbersome than during steady-state operation. Even the methods, approaches, and solutions behind process analytics differ between transient and steady-state operations. Some of the opportunities in this domain are:

- Minimizing the duration of transient operations

- Smooth transition between the two operating strategies

- Optimizing the process parameters

- Grade transition for multi-grade products
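Before any of these opportunities can be pursued, the transient and steady-state portions of the data usually have to be separated. A minimal sketch of one simple heuristic follows: a point is labelled steady when the standard deviation over a trailing window stays within a tolerance. The function name, window length, and tolerance are assumptions for illustration, and practical steady-state detection often needs more robust tests chosen with process knowledge.

```python
from statistics import pstdev


def label_operating_mode(signal, window, tolerance):
    """Label each sample as 'steady', 'transient', or 'unknown'.

    A sample is 'steady' when the standard deviation of the trailing
    window (including the sample) is at most tolerance. Samples without
    a full window of history are labelled 'unknown'.
    """
    labels = []
    for i in range(len(signal)):
        recent = signal[max(0, i - window + 1): i + 1]
        if len(recent) < window:
            labels.append("unknown")
        elif pstdev(recent) <= tolerance:
            labels.append("steady")
        else:
            labels.append("transient")
    return labels


# Illustrative signal: ramp up, hold, ramp down.
signal = [0, 10, 20, 30, 30, 30, 30, 20, 10]
labels = label_operating_mode(signal, window=3, tolerance=0.5)
print(labels)
```

With labels like these, steady-state samples can be routed to a steady-state model while startup and shutdown data are analysed separately, instead of forcing one model to cover both regimes.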

Conclusion

Clearly, cleaning the data is not straightforward: it requires domain expertise, knowledge of how the process evolves, and process-oriented logic for filling and replacing values. Even dropping a feature should be decided with the first-principles model, because a feature with low variance can be as important as any other. The behavior of trends in the data-set can also be very different for transient and steady-state operations.

This leads us to conclude that data cleaning depends on the process you are dealing with, on its nature and behavior, and on many other ad hoc process attributes that need to be determined and considered case by case. Cleaning the data may be an iterative step if the data-set does not seem to be a good representation of the process.

If you missed the previous article in this series, which was about data aggregation, please visit this link; it reinforces some of the core concepts behind data pre-processing in process manufacturing.

I hope this was helpful and gives you some strong insights into process understanding when using a data-driven approach to drive a solution.

Written by:

Parth Sinha

Sr. Data Scientist at Tridiagonal Solutions