This article, the third in the series, focuses on the necessary and sufficient volume of data for effective process and manufacturing analytics.

A few questions should come to mind before you apply analytics:

- Why is the volume of data important for meeting your analytics requirements?

- How to determine the required volume of data beforehand?

- What are the data attributes that link to your data volume requirements?

The answer to all of these questions lies in the process or operation you are dealing with. Above all, the data should be a good representation of your process. It should reflect the process variability, the operating strategy, and anything else that helps in understanding the process. Metadata, which is a summary of your data, should give you a clear picture of the process parameters and their operational and control limits. It should also tell you enough about good and bad process behavior, which ultimately dictates product quality, whether that shows up as poor yield, rejected batches, or similar outcomes. This information matters when you develop the model at a later stage, because the model has to be trained on every process scenario the data can represent. For example, your model can predict rejected batches only if the data fed to it contains information about rejected batches.

Two key attributes for validating your data are:

- The amount of data (identified by the start and end timestamps of the data)

- Sampling frequency
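Both attributes can be read straight from the timestamps of an exported signal. The following is a minimal sketch, not a definitive implementation: the function name `describe_coverage` and the example tag history are hypothetical, and the median gap is used as the sampling interval on the assumption that only a few readings are missing.

```python
from datetime import datetime, timedelta
from statistics import median


def describe_coverage(timestamps):
    """Summarize how much data there is and how often it was sampled."""
    ordered = sorted(timestamps)
    # Gaps between consecutive readings, in seconds.
    gaps_s = [(b - a).total_seconds() for a, b in zip(ordered, ordered[1:])]
    return {
        "start": ordered[0],
        "end": ordered[-1],
        "span_hours": (ordered[-1] - ordered[0]).total_seconds() / 3600,
        # The median gap is not distorted by a handful of missing readings.
        "median_interval_s": median(gaps_s),
    }


# Hypothetical tag history: one reading every 5 minutes for 2 hours,
# with a single reading missing (index 7).
start = datetime(2023, 1, 1)
timestamps = [start + timedelta(minutes=5 * i) for i in range(25) if i != 7]

summary = describe_coverage(timestamps)
print(summary["span_hours"], summary["median_interval_s"])
```

Comparing the reported span and interval against what the process actually needs is a quick first validation step before any modelling.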

The amount of data

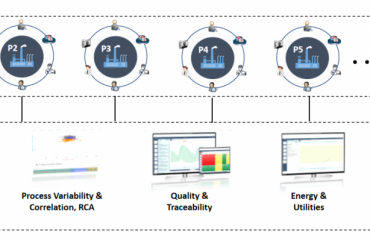

The answer depends on the kind of analytics you are interested in, such as predictive, diagnostic, or monitoring.

Monitoring: This kind of analytics works on near-real-time data from the signals that need to be kept under control. The statistical limits for each monitored signal are assumed to have been estimated beforehand, either using statistical process control (SPC) or from subject-matter expert (SME) know-how.
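As an illustration of limits estimated beforehand, the sketch below derives control limits from a baseline period and checks new readings against them. It assumes the common Shewhart-style convention of mean plus or minus three standard deviations, which is one of several SPC options. The function names and all readings are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, upper) limits as mean +/- k sample standard deviations."""
    centre = mean(baseline)
    spread = stdev(baseline)
    return centre - k * spread, centre + k * spread


def out_of_control(readings, limits):
    """Return (index, value) pairs for readings outside the limits."""
    lower, upper = limits
    return [(i, x) for i, x in enumerate(readings) if x < lower or x > upper]


# Hypothetical baseline collected while the process was known to be stable.
baseline = [50.1, 49.8, 50.3, 50.0, 49.9, 50.2, 49.7, 50.0, 50.1, 49.9]
limits = control_limits(baseline)

# Hypothetical near-real-time readings checked against the fixed limits.
new_readings = [50.0, 50.2, 51.5, 49.9]
alarms = out_of_control(new_readings, limits)
print(alarms)  # [(2, 51.5)]
```

The limits are computed once from historical data and then held fixed, so the monitoring step itself needs very little data at run time.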

Diagnostics: This layer of analytics depends on a minimum volume of historical data that is large enough to estimate process variability. The goal is to identify the parameters that are significantly associated with your product quality or output parameters. A sensitivity analysis of the output parameters with respect to the input space is needed to validate the critical process parameters. Typically, 2-3 years of data are required for this kind of analytics in the process industry.
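A first screening of which inputs are associated with an output can be sketched with a plain Pearson correlation. This is only a simple screening step, not a full sensitivity analysis, and it captures linear association only. The parameter names and batch values below are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def rank_parameters(inputs, output):
    """Rank input parameters by absolute correlation with the output."""
    scores = {name: pearson(values, output) for name, values in inputs.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical per-batch averages of two inputs and the resulting yield.
inputs = {
    "reactor_temp": [180, 182, 185, 187, 190, 192],
    "feed_rate": [10.0, 10.4, 9.8, 10.1, 10.3, 9.9],
}
yield_pct = [88.0, 88.9, 90.1, 91.2, 92.0, 93.1]

ranking = rank_parameters(inputs, yield_pct)
for name, r in ranking:
    print(f"{name}: r = {r:.2f}")
```

With only a handful of batches such rankings are unstable, which is one reason this layer needs enough history to cover the real variability of the process.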

Predictive: The model developed in this stage is trained on a dataset that is assumed to contain all the underlying information about the process. For efficient prediction, the dataset must therefore carry information about the uncertainties and abnormalities that happened in the past. An important point is that the model can predict only those process scenarios it has learned from the historical data. If some uncertainties were missed during training, the data has to be re-validated and the model re-trained and re-tuned. More data generally gives you a more robust model with better predictive performance. The right volume of data is the minimum threshold beyond which the model’s performance no longer varies significantly.
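The idea of a minimum threshold can be sketched as a learning curve: train on increasing volumes of data, score each model on the same held-out set, and pick the smallest volume after which the error stops changing. The example below is a minimal sketch under stated assumptions: the process is simulated as a noisy straight line, the model is a least-squares line, and the helper names and the tolerance are all hypothetical choices.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def rmse(model, xs, ys):
    """Root mean squared error of the fitted line on (xs, ys)."""
    slope, intercept = model
    errors = [(slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)]
    return (sum(errors) / len(errors)) ** 0.5


def learning_curve(train, test, volumes):
    """Held-out error after training on the first n rows, for each n."""
    test_x, test_y = zip(*test)
    curve = []
    for n in volumes:
        xs, ys = zip(*train[:n])
        curve.append((n, rmse(fit_line(xs, ys), test_x, test_y)))
    return curve


def plateau_volume(curve, tolerance):
    """Smallest volume whose error stays within tolerance of all larger volumes."""
    for i, (volume, error) in enumerate(curve):
        if all(abs(error - later) <= tolerance for _, later in curve[i + 1:]):
            return volume
    raise ValueError("curve is empty")


def simulate(n):
    """Hypothetical process: y = 2x + 1 plus Gaussian noise."""
    rows = []
    for _ in range(n):
        x = random.uniform(0, 10)
        rows.append((x, 2.0 * x + 1.0 + random.gauss(0, 0.5)))
    return rows


random.seed(0)
train, test = simulate(400), simulate(200)
volumes = [5, 10, 25, 50, 100, 200, 400]

curve = learning_curve(train, test, volumes)
chosen = plateau_volume(curve, tolerance=0.02)
for n, err in curve:
    print(f"{n:>4} rows: RMSE = {err:.3f}")
print("smallest sufficient volume:", chosen)
```

For real process data the rows would be ordered in time, so each volume corresponds to a duration such as 6 months, 1 year, or 2 years, and the tolerance should reflect what counts as a meaningful change in model performance for the application.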

Sampling Frequency

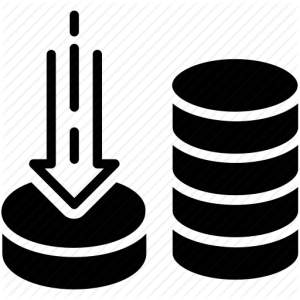

This parameter plays a very important role in analytics. The correct sampling frequency yields a dataset that represents your process operations accurately. With too low a sampling rate (too long an interval between samples), you may miss process information that is critical for the model to make good predictions. Dynamic, rapidly changing operations can require data to be collected at a higher frequency (every second or every minute). The collected data should be stored with minimal latency in a high-performance database so that it can be used efficiently in analytics later. The performance of the database can be judged by its data compression and asset structuring capabilities. For scalable and optimized analytics, a time-series database (process historian) is preferred over an SQL database.
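The effect of the sampling interval on what the data can show is easy to demonstrate. In the sketch below, a hypothetical pressure trace logged once per second contains a 20-second excursion. Keeping only every 60th reading drops the excursion entirely, while a 10-second interval still captures it. The signal values and the `downsample` helper are illustrative assumptions.

```python
def downsample(signal, step):
    """Keep every step-th sample, mimicking a longer sampling interval."""
    return signal[::step]


# Hypothetical pressure trace: 10 minutes at 1-second resolution,
# steady at 5.0 bar with a 20-second excursion to 8.0 bar.
signal = [5.0] * 600
for t in range(130, 150):
    signal[t] = 8.0

for step in (1, 10, 60):
    sampled = downsample(signal, step)
    print(f"interval {step:>2} s: {len(sampled):>3} samples, max = {max(sampled)}")
# interval  1 s: 600 samples, max = 8.0
# interval 10 s:  60 samples, max = 8.0
# interval 60 s:  10 samples, max = 5.0
```

A model trained on the 60-second data would never see the excursion, however many months of history were collected.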

Conclusion

Both the volume of data and the sampling frequency play a vital role in process analytics:

- The right volume of data should do justice to your model. The recommended approach is to compare the model’s performance on several volumes of data before finalizing the dataset. For example, if your model performs the same on 1 year and 2 years of data, then 1 year of data should be sufficient for training the model.

- The sampling frequency should be selected so that even small process variations and uncertainties are captured and conveyed to your model. For example, for slowly varying operations a sampling interval of 5 minutes, or even 1 hour, should be adequate, provided the data is properly validated. On the other hand, a process with large and rapid fluctuations in its parameters may require data to be sampled every few seconds or minutes.