In this article, we look at why the attributes of data matter for smart manufacturing and digital twins. We also cover the importance of industrial maturity with respect to IT infrastructure, and the various initiatives that have been a major driving force behind upgrading technology and up-skilling the workforce.

This series will cover the following:

- Data Aggregation

- Data Cleaning

- Volume of Datasets

- Real-Time Analytics

- Deploying your ML/AI solution across the industry for ROI benefits

In this article, we focus on the first topic, “Data Aggregation”; subsequent articles will take up the remaining topics one by one.

The intent of this series is to build a robust understanding of the pros and cons of Data Analytics and its implementation from a business-model perspective, and to identify the critical steps that carry the most potential for delivering significant business outcomes and time savings to your organization.

Data Aggregation

Why?

Industry 4.0 has recently attracted major interest across almost all process-manufacturing sectors. Many large Oil & Gas, Pharma, heavy-metals, and other companies have launched major digital initiatives. What drives most of these initiatives is either an incomplete understanding of the process or the need to realize ROI from the research programs they run for smart manufacturing. Closing that gap traditionally requires extensive programs: either a series of expensive experiments, or computationally expensive simulations that solve mechanistic models of the process. Classic examples are setting up a modeling environment to understand a crystallization process, or running experiments to understand corrosion and erosion phenomena. These initiatives suffer from two crucial drawbacks: they may end in unsuccessful outcomes, or they bring no major return on the investment, because they were meant mostly for understanding the process and its parameters rather than for directly improving it in the first place.

This paves the way for replacing existing techniques with data-driven business models, which eliminate most of the limitations of the initiatives mentioned above. They reduce the dependence on mathematical models, experimental runs, and expensive simulations, and bring the focus onto “Data”, which is our new star.

Well, this does not mean that process understanding is no longer required, but it does reduce the effort and investment that went into capturing insights with the methods discussed above. Again, though, a big challenge comes up at this stage: the “Data” itself.

- Is your process data being captured on a real-time basis?

- Do you store historical data, either in a Process Historian or a similar database?

- Which signals should be stored for better insight?

- Tagging, indexing, architecture, etc.

How?

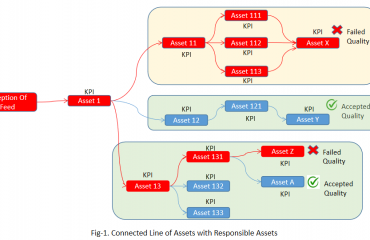

The underlying principle of any data-driven model is that “your data is the sole entity that speaks about your process performance.” This means that anything directly associated with the data matters to us, for example its volume and its cleanliness. A major gap we typically see today is that most industries have still not upgraded their internal infrastructure for data collection. They still rely on SCADA/DCS units rather than Process Historians, which breaks the link between the process and digitization. SCADA (Supervisory Control and Data Acquisition), as its name suggests, is meant for supervisory control rather than analytics, whereas a Process Historian is built around the requirements that add value to your analytics. A few advantages of a Historian over SCADA:

- Can capture large volume of data (over 10–20 yrs),

- Handles basic data cleaning,

- Handles tagging and indexing of the signals,

- Can structure the data based on the assets,

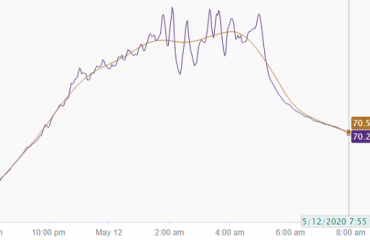

- Allows the user to have basic visualization of the historical trends

Although this does not reveal all the stages (advantages and disadvantages) behind the curtain of Advanced Analytics, it does open the gateway for you to perform it. With a Historian in place, the main limitation on the basic entity of any data-driven approach, the data itself, is removed.
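To make the Historian features listed above a little more concrete, here is a minimal, stdlib-only Python sketch of the underlying ideas behind data aggregation: each signal (tag) is registered against a position in an asset hierarchy, time-stamped values are stored per tag, missing readings are skipped as a very basic cleaning step, and raw samples are averaged into fixed time intervals. The `TagStore` class, its methods, the `Plant1/Reactor2`-style asset paths, and the tag names are all hypothetical; a real Process Historian has its own storage engine, compression, and asset model, and this is not its API.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class TagStore:
    """Hypothetical, minimal historian-like store (illustration only, not a real product API)."""

    # tag name -> asset path, e.g. "Plant1/Reactor2" (tagging + asset structure)
    asset_of: dict = field(default_factory=dict)
    # tag name -> list of (timestamp_seconds, value) samples (indexing by tag)
    samples: dict = field(default_factory=lambda: defaultdict(list))

    def register(self, tag, asset_path):
        """Attach a tag to a node in the asset hierarchy."""
        self.asset_of[tag] = asset_path

    def write(self, tag, t, value):
        """Store one time-stamped reading; unregistered tags are rejected."""
        if tag not in self.asset_of:
            raise KeyError(f"unregistered tag: {tag}")
        self.samples[tag].append((t, value))

    def tags_for_asset(self, asset_path):
        """All tags registered on an asset or any asset beneath it, sorted by name."""
        return sorted(
            tag for tag, asset in self.asset_of.items()
            if asset == asset_path or asset.startswith(asset_path + "/")
        )

    def aggregate(self, tag, interval):
        """Average readings into fixed, non-overlapping buckets of `interval` seconds."""
        buckets = defaultdict(list)
        for t, value in self.samples[tag]:
            if value is None:  # skip missing readings (a very basic cleaning step)
                continue
            buckets[t // interval * interval].append(value)
        return {start: mean(values) for start, values in sorted(buckets.items())}


# Usage with made-up tags and readings
store = TagStore()
store.register("TI-101", "Plant1/Reactor2")
store.register("PI-102", "Plant1/Reactor2")
store.register("FI-201", "Plant1/Dryer1")
for t, v in [(0, 80.0), (30, 82.0), (60, 85.0), (90, None), (100, 87.0)]:
    store.write("TI-101", t, v)

print(store.tags_for_asset("Plant1/Reactor2"))  # ['PI-102', 'TI-101']
print(store.aggregate("TI-101", 60))            # {0: 81.0, 60: 86.0}
```

Averaging into fixed buckets is only one possible aggregation; minimum/maximum, last value, or time-weighted averages may suit some signals better.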

Now?

So far, so good. Now that your plant can capture and store data in a Process Historian, you are ready to take on many digitization initiatives and approaches. The most logical next step is to define a use case and narrow your focus to only the signals associated with it. This brings clarity to defining the methods, approach, and solution for your business.
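As one possible sketch of "define a use case and narrow the focus to its signals", the Python below assumes a use case is described by a target signal and the asset it belongs to. Candidate signals inside that asset scope are ranked by absolute Pearson correlation with the target. Correlation screening is a simple technique swapped in here purely for illustration: it is not a method prescribed by this article, it only captures linear relationships, and any shortlist should still be checked against process understanding. `UseCase`, `select_signals`, the tag names, and the values are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class UseCase:
    """Hypothetical use-case definition: one target signal plus the asset scope it lives in."""
    name: str
    target_tag: str
    asset_scope: str  # e.g. "Plant1/Reactor2"


def pearson(x, y):
    """Pearson correlation of two equal-length sequences; 0.0 if either is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def select_signals(use_case, asset_of, series, top_k=2):
    """Keep tags inside the use case's asset scope, ranked by |correlation| with the target."""
    target = series[use_case.target_tag]
    in_scope = [
        tag for tag, asset in asset_of.items()
        if tag != use_case.target_tag
        and (asset == use_case.asset_scope or asset.startswith(use_case.asset_scope + "/"))
    ]
    ranked = sorted(in_scope, key=lambda tag: abs(pearson(series[tag], target)), reverse=True)
    return ranked[:top_k]


# Usage with made-up, already aligned samples (same timestamps for every tag)
asset_of = {
    "YIELD": "Plant1/Reactor2", "TI-101": "Plant1/Reactor2",
    "PI-102": "Plant1/Reactor2", "AI-103": "Plant1/Reactor2", "FI-201": "Plant1/Dryer1",
}
series = {
    "YIELD": [90, 91, 93, 94, 96],
    "TI-101": [80, 81, 83, 84, 86],  # rises with the target
    "PI-102": [5, 5, 5, 5, 5],       # constant, carries no information
    "AI-103": [10, 8, 9, 6, 5],      # tends to fall as the target rises
    "FI-201": [1, 2, 3, 4, 5],       # outside the asset scope, never considered
}
uc = UseCase("yield-improvement", "YIELD", "Plant1/Reactor2")
print(select_signals(uc, asset_of, series))  # ['TI-101', 'AI-103']
```

Restricting candidates by asset scope first keeps the screening tied to the use case, so signals from unrelated equipment cannot enter the shortlist just by chance correlation.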

Our Process Analytics Group (PAG) at Tridiagonal Solutions can help you establish a wide range of solutions for these digitization journeys, from data collection to advanced modeling and a roll-out plan for deployment.

In the next article in this series, we will discuss the importance of data cleaning and its impact on the solution. Stay tuned!

I hope this article helps you shape your approach to your digitization journey.

Written by:

Parth Sinha

Sr. Data Scientist at Tridiagonal Solutions